Graph all the things

analyzing all the things you forgot to wonder about

President Rankings

2017-05-11

interests: US history, unsupervised learning, interactive visualizations

Some historians find it fun to rank presidents, and they do this regularly in the Siena Research Institute Presidents Study, ranking all past presidents on 20 different criteria. I noticed that the rankings are heavily redundant. Just look at "overall ability" versus "executive ability": that's 96% correlation! To make a bit more sense of this 20-dimensional data (a ranking for each category for each president), I plotted each president's rankings in 20-dimensional space, then rotated and recentered them so that the axes line up with the directions along which the data varies the most.

Click on a president to follow him, and compare different axes!

This is called Principal Component Analysis (PCA). The resulting dimensions are called principal components. They are ranked from most to least descriptive, meaning by how much of the data's variation each one captures, and the most descriptive one is the first principal component.
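To make the "recenter, then rotate" idea concrete, here is a minimal sketch in Python with NumPy. It is not the code behind this post: the function name `pca` and the synthetic 40-by-5 data are assumptions made up for illustration, standing in for the real 20-category rankings.

```python
import numpy as np

def pca(data):
    """Return (components, variances, scores) for an (n_samples, n_features) array."""
    centered = data - data.mean(axis=0)          # recenter the cloud on the origin
    cov = np.cov(centered, rowvar=False)         # feature-by-feature covariance matrix
    variances, components = np.linalg.eigh(cov)  # eigh returns eigenvalues in ascending order
    order = np.argsort(variances)[::-1]          # reorder: most descriptive first
    variances = variances[order]
    components = components[:, order]            # columns are the new axes
    scores = centered @ components               # coordinates of each row along the new axes
    return components, variances, scores

# Synthetic stand-in (NOT the survey data): one shared "general quality"
# factor plus a little independent noise in each of 5 categories.
rng = np.random.default_rng(0)
quality = rng.normal(size=(40, 1))
data = quality @ np.ones((1, 5)) + 0.3 * rng.normal(size=(40, 5))

components, variances, scores = pca(data)
print("share of variation on the first axis:", variances[0] / variances.sum())
```

Because the synthetic categories all share one underlying factor, most of the variation lands on the first axis, which is the same kind of redundancy the rankings show.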

My takeaways from this are:

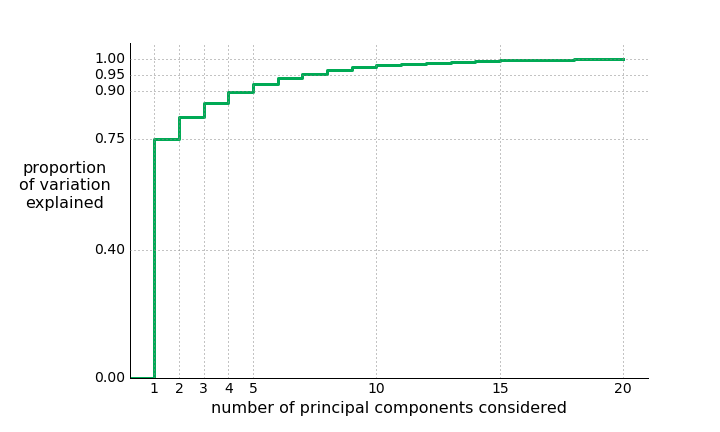

- The most descriptive quality for a president is how good they generally were. This axis alone explains 75% of the dataset's variation.

- The 2nd most descriptive quality for a president is how much more redeeming their qualities were than their successes.

- The 3rd most descriptive quality for a president is the extent to which they made cautious (and ethical) decisions without collaboration/leadership.

- The 4th most descriptive quality for a president is the extent to which they made cautious decisions with collaboration/leadership.

- Any components after that are either too hard to interpret or basically just noise; the remaining 16 account for only 10% of the dataset's variation. Perhaps these first 4 components are the only true criteria we should bother asking historians about.

- Lyndon B. Johnson is the most interesting president.

Here's the proportion of the data that's conveyed by the first components:
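For reference, that proportion is the variance along each component divided by the total variance, accumulated over the first few components. A rough sketch in Python with NumPy follows. The helper name `explained_variance_ratio` and the random data are assumptions for illustration only, so the numbers it prints say nothing about the actual rankings.

```python
import numpy as np

def explained_variance_ratio(data):
    """Fraction of total variance captured by each principal component, largest first."""
    cov = np.cov(data, rowvar=False)
    eigenvalues = np.linalg.eigvalsh(cov)[::-1]  # eigvalsh is ascending, so reverse it
    return eigenvalues / eigenvalues.sum()

# Synthetic stand-in (NOT the survey data): 6 columns with very different spreads.
rng = np.random.default_rng(1)
data = rng.normal(size=(40, 6)) * np.array([5.0, 2.0, 1.0, 0.5, 0.5, 0.5])

ratios = explained_variance_ratio(data)
cumulative = np.cumsum(ratios)  # share conveyed by the first k components
for k, (ratio, total) in enumerate(zip(ratios, cumulative), start=1):
    print(f"component {k}: {ratio:.1%} (cumulative {total:.1%})")
```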

Math and Intuition for PCA

This part relies on knowledge of covariance and basic linear algebra.

Let $X$ be a random variable in $\mathbb{R}^n$ with $n \times n$ covariance matrix $\Sigma$ (in this case, $n = 20$ and the variables are the rankings, so the covariance is Spearman's rank covariance). The principal components of the probability distribution are the normalized eigenvectors $v_1, \ldots, v_n$ of $\Sigma$. They have important properties that make PCA meaningful:

- The most descriptive component is the one with the largest corresponding eigenvalue. In general, the eigenvalue $\lambda_i$ corresponding to $v_i$ is the variance of the distribution of $X$ in the direction of $v_i$; that is, $\mathrm{Var}(v_i^T X) = \lambda_i$. This gives a nice easy way to rank the components from most to least descriptive.

- The principal components are uncorrelated; $\mathrm{Cov}(v_i^T X, v_j^T X) = 0$ for $i \neq j$. This is what makes the data appear to line up with the axes.
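Both properties are easy to check numerically: the variance of the data along each eigenvector should equal that eigenvector's eigenvalue, and the projections onto different eigenvectors should have zero covariance. Here is a small sketch in Python with NumPy on made-up correlated data. The variable names and the data are assumptions for illustration, and it uses the ordinary sample covariance rather than Spearman's rank covariance.

```python
import numpy as np

# Synthetic correlated data (NOT the survey data): mix 4 independent columns.
rng = np.random.default_rng(2)
mixing = rng.normal(size=(4, 4))
samples = rng.normal(size=(500, 4)) @ mixing

sigma = np.cov(samples, rowvar=False)              # sample covariance matrix
eigenvalues, eigenvectors = np.linalg.eigh(sigma)  # columns are unit-length eigenvectors

# Project every sample onto every eigenvector, then look at the covariance
# matrix of those projections.
projections = samples @ eigenvectors
projected_cov = np.cov(projections, rowvar=False)

# Property 1: the variance along each eigenvector equals its eigenvalue.
assert np.allclose(np.diag(projected_cov), eigenvalues)

# Property 2: projections onto different eigenvectors are uncorrelated,
# so everything off the diagonal is zero up to rounding error.
off_diagonal = projected_cov - np.diag(np.diag(projected_cov))
assert np.allclose(off_diagonal, 0.0, atol=1e-10)
```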

In fact, PCA can be derived from that last bullet. If you remember some basic formulas for covariance,